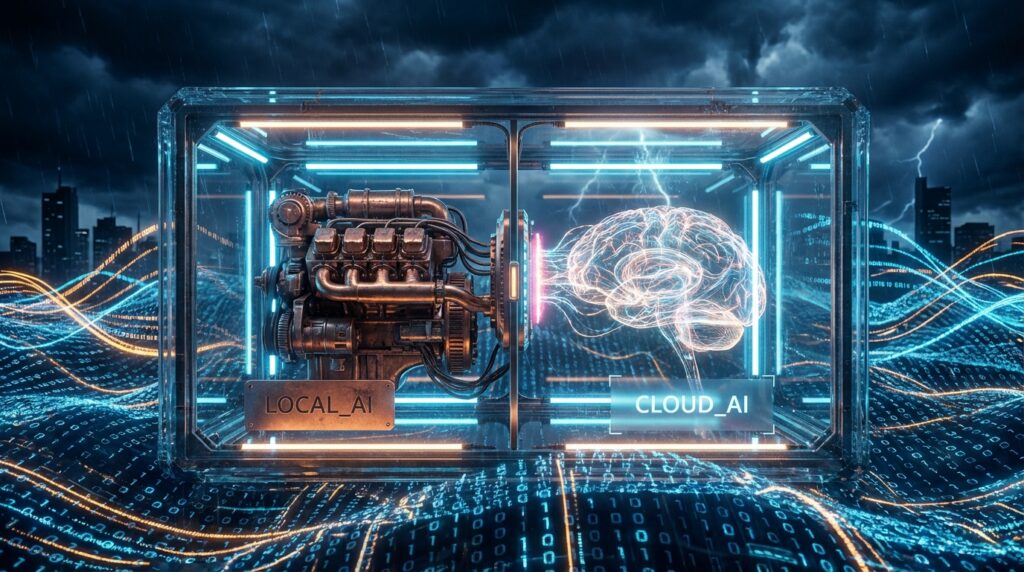

The architecture in this image might not look sexy, but this is the “sewerage engineering” of AI. The combination of E2B and a cloud runtime means Agents finally don’t have to “relieve themselves” all over your laptop.

It is February 2026. Drone delivery outside the window has long since become the norm, yet judging by the GitHub trending charts, some things remain as stubborn as fossils.

Even today, the vast majority of developers’ AI Agents are still like unweaned babies, living exclusively on localhost. We watch them spit out characters in the VS Code terminal, but the moment the laptop lid closes, these agents that claim they will “change the world” suffer instant brain death. Worse still, just getting them to run has turned your development environment into a garbage dump of endless pip install commands.

At this juncture, the emergence of vm0-ai/vm0 is like a germaphobe geek pulling your messy Agent out of the chaos and throwing it into a sterile room called a “Cloud Sandbox.”

This isn’t a story about models getting smarter; it is a real-estate question about “where models live.”

01. Necessary “Isolation”: Putting a Hazmat Suit on the Agent

If you still dare to run untrusted Agent code directly on your primary machine, I can only salute you as a tough guy, or perhaps just a hard-headed one.

The core logic of vm0 is highly counter-intuitive: It doesn’t care what the Agent chats about; it only cares about how the Agent might “explode.”

Look at its architecture documentation: the keywords are Firecracker, containers, and isolation. This is the “depth” I’m talking about. During the Agent boom of 2025, countless demos died on stage because of conflicting environment dependencies. vm0, however, did something extremely boring but correct: standardized cloud sandbox environments.

This isn’t as simple as “throwing code onto the cloud.” Consider this inconspicuous commit:

refactor(runner): replace Python vsock-agent with Rust implementation

This single line reveals a massive amount of information. Python is glue, but Rust is concrete.

When a project starts rewriting its low-level vsock module (the virtio socket channel that carries traffic between the host and the guest micro-VM) in Rust, it is no longer satisfied with merely “working”; it has begun to pursue “millisecond-level survival instincts.” Deep in the plumbing of virtualization, Python’s interpreter overhead is an original sin. The likely payoff of this cut is lower latency and more predictable behavior under high concurrency.

To put it plainly, Agents used to live in Airbnbs, where the environment depended entirely on the landlord’s mood; vm0 moves them into standardized capsule hotels. The space isn’t huge, but water, electricity, and internet are all hooked up, and the isolation makes it far safer.
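To make the host-guest channel less abstract, here is a minimal Python sketch of the kind of work a vsock agent does: shuttling length-prefixed messages over a socket. The wire format (a 4-byte length header plus a JSON body) and the `send_msg`/`recv_msg` helpers are hypothetical illustrations, not vm0’s actual protocol, and `socket.socketpair()` stands in for a real vsock connection so the demo runs on any machine. It is written in Python only for readability; the whole point of vm0’s commit is that this hot path moves to Rust.

```python
import json
import socket
import struct

# Hypothetical wire format (NOT vm0's real protocol):
# a 4-byte big-endian length prefix followed by a UTF-8 JSON payload.
HEADER = struct.Struct(">I")


def send_msg(sock: socket.socket, payload: dict) -> None:
    """Serialize payload and write it as a single length-prefixed frame."""
    body = json.dumps(payload).encode("utf-8")
    sock.sendall(HEADER.pack(len(body)) + body)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; recv() is allowed to return partial data."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-frame")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock: socket.socket) -> dict:
    """Read one frame: the length header first, then exactly that many bytes."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


if __name__ == "__main__":
    # socketpair() is a stand-in for a host<->guest vsock connection;
    # inside a Linux guest one would open a socket.AF_VSOCK socket instead.
    host, guest = socket.socketpair()
    send_msg(host, {"cmd": "exec", "argv": ["echo", "hi"]})
    print(recv_msg(guest))  # prints {'cmd': 'exec', 'argv': ['echo', 'hi']}
    host.close()
    guest.close()
```

Length-prefixed framing is the simplest way to recover message boundaries from a byte stream, which is exactly what a stream socket like vsock provides.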

02. Blind Spot: The Underrated “Skill Native”

Most people stare at vm0’s “sandbox” but miss its more ambitious move: Compatibility with skills.sh.

In the README, it’s just a short line: Compatible with 35,738+ skills in skills.sh.

But this is precisely the craftiest (in a good way) part of the entire ecosystem.

Over the past two years, we’ve witnessed too many “tool protocol wars.” OpenAI has its standard, Anthropic has its own, and every startup wants to define “how an Agent calls a weather API.” The result? Developers worked themselves to exhaustion writing adapters.

vm0 chose a “lazy” but smart path: Don’t reinvent the wheel, just fix the highway.

It directly reuses the existing skill ecosystem, which means an Agent running in this sandbox can draw on tens of thousands of ready-made skills from day one. This isn’t technical innovation; it is business logic striking from a higher dimension. It bets that future Agents need not stronger models but richer “hands” and “eyes.”

Furthermore, look at its recent integration of minimax-api-key and even native support for Claude Code. This kind of “promiscuous” broad compatibility is exactly what infrastructure should look like: I treat all models well, but I belong only to myself.

03. Industry Reality Check: The Ghost of Docker and the Shadow of AWS

If we put vm0 under a microscope and compare it with the industry behemoths, it gets interesting.

Some might say, “Isn’t this just a wrapped Docker?”

If you think that, you may be underestimating how badly an LLM Agent suffers when it loses its context. Docker solves dependency problems; vm0 tackles the persistence of state and context.

Docker containers are usually treated as disposable: run, then throw away. An Agent, however, is a continuous conversation, a sustained workflow. The persistence vm0 emphasizes lets you interrupt, resume, or even fork a running Agent session at any time. It’s like installing a “Time Travel” button on Docker.
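What might “interrupt, resume, fork” look like in practice? Below is a minimal Python sketch under loud assumptions: `AgentSession` and its fields are hypothetical, and session state is reduced to a JSON-serializable conversation log plus a dict of files. A real sandbox would more likely snapshot the whole micro-VM or its filesystem (Firecracker does support microVM snapshots), but the semantics are the same: a checkpoint freezes state, a resume rebuilds it, and a fork copies it so two timelines can diverge.

```python
import copy
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentSession:
    """Hypothetical agent session; not vm0's actual state model."""
    session_id: str
    messages: list = field(default_factory=list)  # conversation history
    files: dict = field(default_factory=dict)     # path -> file contents

    def checkpoint(self) -> str:
        """Interrupt: serialize the full session state to a JSON string."""
        return json.dumps(asdict(self))

    @classmethod
    def resume(cls, blob: str) -> "AgentSession":
        """Resume: rebuild a live session from a checkpoint string."""
        return cls(**json.loads(blob))

    def fork(self, new_id: str) -> "AgentSession":
        """Fork: deep-copy the state so parent and child evolve independently."""
        child = copy.deepcopy(self)
        child.session_id = new_id
        return child


if __name__ == "__main__":
    main = AgentSession("main")
    main.messages.append({"role": "user", "content": "write a parser"})
    main.files["parser.py"] = "# TODO"

    blob = main.checkpoint()              # e.g. before the host is recycled
    restored = AgentSession.resume(blob)  # pick up later, possibly elsewhere
    branch = restored.fork("experiment")  # try an alternative approach
    branch.messages.append({"role": "user", "content": "use a regex instead"})

    print(len(restored.messages), len(branch.messages))  # prints 1 2
```

The deep copy in `fork` is the simplest correct choice for a sketch; at VM scale, copy-on-write snapshots would be the efficient equivalent.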

Compared with serverless offerings like AWS Lambda, vm0 seems to “understand” AI better. Lambda is built for short tasks (a single invocation is capped at 15 minutes), and cold starts are its nightmare. vm0’s architecture is clearly designed for long-running processes. After all, you can’t have your Agent killed halfway through writing code just because the cloud provider hit a timeout, right?

However, don’t celebrate too early. Next to the bottomless compute pools of the giants, vm0 currently looks more like an exquisite “showroom.” Cloudflare’s edge nodes are indeed fast, but can they withstand the pressure of state synchronization under massive Agent concurrency? That remains an open question.

04. Unfinished Thought: When the Sandbox Becomes a Prison?

Since we are talking about sandboxes, we must mention a slightly philosophical concern.

vm0 provides perfect isolation, but will isolation stifle the Agent’s creativity?

The ultimate form of an Agent is to “walk freely in this digital world.” When we lock it inside a Firecracker micro-VM, restrict its network permissions, and standardize its system calls, are we protecting the world, or are we castrating AI?

Imagine an Agent designed for “self-evolution”; if it doesn’t even have permission to modify its own runtime environment (locked by vm0’s security policies), can it still evolve?

Perhaps one day, we will see vm0 release a feature called Jailbreak Mode, allowing high-level Agents to break through the sandbox—but that is a story for another time.

05. Final Words

Looking at vm0’s commit history, from the “Initial commit” to the current Rust refactor, I see a trend moving from “fanaticism” back to “engineering.”

In 2026, we no longer gasp because AI can write a limerick. Instead, we care whether it can restart itself automatically at 3 AM, whether it degrades gracefully when VRAM runs out, and whether it avoids leaking private keys to the public internet.
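“Restarting itself at 3 AM” usually comes down to a supervisor loop. The sketch below is a hypothetical Python illustration, not anything vm0 is known to ship: `supervise` reruns a crashed task with capped exponential backoff (1 s, 2 s, 4 s, and so on) and gives up after `max_restarts`. The `sleep` parameter is injectable so the behavior can be checked without actually waiting.

```python
import time
from typing import Callable


def supervise(task: Callable[[], None], max_restarts: int = 5,
              base_delay: float = 1.0, max_delay: float = 60.0,
              sleep: Callable[[float], None] = time.sleep) -> int:
    """Run task; if it raises, restart it with capped exponential backoff.

    Returns how many restarts were needed before the task finished.
    Re-raises the last error once max_restarts is exhausted.
    """
    restarts = 0
    while True:
        try:
            task()
            return restarts
        except Exception:
            if restarts >= max_restarts:
                raise  # give up and surface the failure to a human
            # Double the wait after each crash, but never exceed max_delay.
            sleep(min(max_delay, base_delay * 2 ** restarts))
            restarts += 1


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_agent() -> None:
        """Hypothetical Agent process that crashes on its first two runs."""
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise RuntimeError("simulated crash")

    waits: list = []
    used = supervise(flaky_agent, sleep=waits.append)
    print(used, waits)  # prints 2 [1.0, 2.0]
```

Capping the delay keeps a long outage from turning into hour-long waits. Production supervisors such as systemd’s `Restart=` setting or Kubernetes restart policies implement the same idea with more safeguards.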

vm0 might not be the product that gets your adrenaline pumping; it’s even a bit boring—boring like a sewer system. But it is precisely this boredom that supports the prosperity of the city.

Stop letting your Agent run naked on localhost. The outside world is exciting, but it is also truly dangerous.
