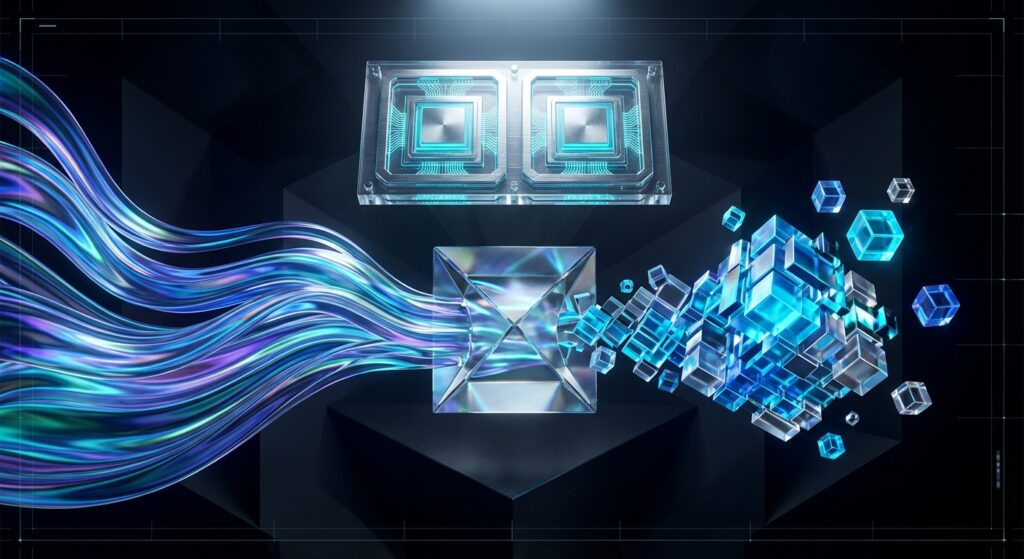

This diagram shows more than an architecture. It shows “brain surgery” on large models: the neural network on the left handles thinking, while the Engram lookup table on the right handles memory, and the two are kept strictly separate.

DeepSeek’s repository quietly added a new line of code, with the commit timestamp frozen in January 2026. This time, they didn’t release a larger model; instead, they took out a scalpel and performed major surgery on the core of the Transformer.

This module, named Engram, looks at first glance like something dug out of a pile of old papers from the 1990s. N-gram? Isn’t that an antique from when Natural Language Processing (NLP) was still playing with statistical probabilities?

Don’t scroll away just yet. This is actually incredibly interesting—while every Silicon Valley giant is frantically stacking parameters, trying to compress the entire internet into GPU VRAM, DeepSeek suddenly snapped its fingers: “Why make Einstein memorize a dictionary?”

This is the essence of Engram: it attempts to solve the most awkward dilemma of large models, the coupling of storage and computation in the same set of weights.

Deep Insight: “Brain-Computer Separation” for Neural Networks

Our current LLMs live a very exhausting life. They have to be both the CPU responsible for logical reasoning and the hard drive storing massive amounts of knowledge. All knowledge—from the “Pythagorean theorem” to “how to make Kung Pao Chicken”—is compressed into those hundreds of billions of floating-point weights within the neural network.

There is a huge bug here: Static knowledge is dead, but neurons are alive.

When you ask the model, “What is the capital of France?”, it has to mobilize a massive neural network to “calculate” the word “Paris.” This is tantamount to using a nuclear reactor to boil water. DeepSeek’s engram_demo_v1.py reveals their solution: Conditional Memory.

The Engram module essentially installs an “external hippocampus” onto the Transformer. It utilizes modernized N-gram embeddings for table lookups. Simple knowledge retrieval (Recall) goes directly through the Engram channel, while complex logical reasoning (Reasoning) goes through the MoE (Mixture of Experts) channel.
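To make the table-lookup idea concrete, here is a minimal sketch of a hashed N-gram embedding lookup. It is not taken from engram_demo_v1.py: the table size, embedding width, hash function, and every name (`ngram_slot`, `build_table`, `engram_lookup`) are assumptions chosen purely for illustration.

```python
import random

NUM_SLOTS = 1 << 12  # hypothetical table size (assumption)
DIM = 8              # hypothetical embedding width (assumption)


def ngram_slot(ngram, num_slots=NUM_SLOTS):
    """Deterministically map a tuple of token IDs to a table row."""
    h = 0
    for tok in ngram:
        # Simple polynomial rolling hash; a real system may hash differently.
        h = (h * 1_000_003 + tok) % (1 << 61)
    return h % num_slots


def build_table(num_slots=NUM_SLOTS, dim=DIM, seed=0):
    """Randomly initialised embedding table: one vector per slot."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(num_slots)]


def engram_lookup(token_ids, table, n=2):
    """For each position, fetch the vector of the n-gram ending there."""
    dim = len(table[0])
    out = []
    for i in range(len(token_ids)):
        if i + 1 < n:
            out.append([0.0] * dim)  # not enough left context yet
        else:
            ngram = tuple(token_ids[i + 1 - n : i + 1])
            out.append(table[ngram_slot(ngram, len(table))])
    return out


table = build_table()
vectors = engram_lookup([5, 17, 5, 17], table)
```

The point of the sketch: retrieving a “memory” costs one hash and one row read per position, with no matrix multiplication involved. The bigram (5, 17) appears twice in the example input, and both occurrences land on the same row.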

The logic behind this is incredibly sexy: Not only can computation be sparse (MoE), but memory can be sparse too.

The “U-shaped scaling law” mentioned in the references is the empirical backbone of this logic. DeepSeek found that, with the total parameter count held fixed, pouring every resource into the neural network (MoE) or every resource into static memory (Engram) gives suboptimal results; the best results sit somewhere in between.

Only by finding the golden ratio between the two can the model achieve a performance leap in code, mathematics, and logical reasoning. Put simply, the best brain spends part of its capacity on thinking and part on looking things up, rather than memorizing everything by rote.

Independent Perspective: Retro-Futurism

What makes this ironic yet fascinating to me is: To reach the future, we are frantically excavating the past.

What is an N-gram? It is the cornerstone of statistical language models. Before Transformers appeared, we generated text by calculating the probability that “a” follows “this is.” Later, the Transformer swept everything away with the Attention mechanism, and N-grams were swept into the dustbin of history.

But DeepSeek picked it back up.

This isn’t a technological regression, but a spiral ascent. Engram is not a simple N-gram; it is a “vectorized” and “differentiable” N-gram. It is no longer a simple probability table, but a memory block that can participate in gradient descent and be “trained.”
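What “trainable” means for a lookup table can be shown with a toy example. The sketch below runs plain gradient descent on a single table row against a squared-error target. In a real model the gradient would arrive by backpropagation from the language-modeling loss; the loss, the learning rate, and the name `sgd_step` here are all illustrative assumptions.

```python
def sgd_step(table, slot, target, lr=0.5):
    """One gradient-descent step on the single row that was looked up.

    Toy loss: 0.5 * ||row - target||^2, so the gradient is (row - target).
    Rows that were not looked up receive no gradient and stay unchanged.
    """
    row = table[slot]
    grad = [r - t for r, t in zip(row, target)]
    loss = 0.5 * sum(g * g for g in grad)
    table[slot] = [r - lr * g for r, g in zip(row, grad)]
    return loss


# Two-row toy table; only row 0 is "retrieved" and therefore trained.
toy_table = [[0.0, 0.0], [1.0, 1.0]]
loss_before = sgd_step(toy_table, 0, [1.0, -1.0])
loss_after = sgd_step(toy_table, 0, [1.0, -1.0])
```

Unlike a classic N-gram count table, the retrieved vector moves toward whatever reduces the loss, and the update is sparse: only the rows that were actually looked up change.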

There is a blind spot here that is rarely noticed: Effective Depth.

There is an inconspicuous sentence in the references: “Engram relieves early layers from static pattern reconstruction.”

Translated into plain English: Previously, the first few layers of a model were busy spelling words and recalling idioms, leaving no time for logical thinking. Now, Engram has taken over these dirty chores, finally freeing up the neural network layers to handle true “deep” reasoning.

It’s like when you used to take exams, spending the first 30 minutes writing down formulas from memory. Now, DeepSeek allows you to bring a formula sheet into the exam hall. Tell me, won’t your problem-solving ability skyrocket?

Industry Comparison: RAG is a Patch, Engram is Genetic Engineering

Seeing “external memory,” you might think of the RAG (Retrieval-Augmented Generation) that is everywhere these days.

“Isn’t this just attaching a knowledge base to the model? What’s so special?”

There is a fundamental difference. RAG is a physical attachment. Before the model answers (or, in some pipelines, partway through generation), a retriever searches a database for relevant passages and pastes them into the prompt, and only then does generation continue. It is slow, rigid, and disjointed.

Engram is biological fusion. It is part of the model architecture itself. The N-gram lookup happens inside the forward pass, within the same milliseconds as the rest of inference, and its result is fused directly into the hidden states.
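One plausible way to picture “fused with the hidden states” is a gated residual addition. The article does not spell out the exact fusion rule, so the scalar sigmoid gate below is an assumption for illustration, not DeepSeek's formula; `fuse` is a hypothetical name.

```python
import math


def fuse(hidden, memory):
    """Add a retrieved memory vector to a hidden state through a scalar gate.

    The gate is a sigmoid of the scaled dot product between the two vectors
    (an assumed design): the more the retrieved memory agrees with the
    current context, the more of it is let through.
    """
    score = sum(h * m for h, m in zip(hidden, memory)) / math.sqrt(len(hidden))
    gate = 1.0 / (1.0 + math.exp(-score))
    fused = [h + gate * m for h, m in zip(hidden, memory)]
    return fused, gate


fused, gate = fuse([1.0, 0.0], [0.0, 1.0])
```

The contrast with RAG is that nothing is pasted into the prompt. The memory enters as a vector inside the layer, and the context itself decides how much of it to use.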

As seen in this comparison chart, at the same parameter scale the architecture-optimized model outclasses the traditional architecture across multiple capabilities.

Contrast this with a certain top Silicon Valley giant still frantically expanding the context window, trying to let the model read 1,000 books at once. DeepSeek’s approach is clearly more “geekily cunning.” Expanding the window is brute force: it is expensive, and the cost of standard self-attention grows quadratically with context length. Engram, however, uses deterministic addressing: the table rows it needs depend only on the input tokens, so the massive embedding tables can be parked in host memory and fetched ahead of time.

What does this mean? It means that, in principle, you could run a model with an enterprise-level knowledge base on a consumer-grade graphics card, because the massive “memory” doesn’t need to occupy expensive VRAM. It just needs to sit on standby in system RAM.
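Why deterministic addressing makes host memory workable can be sketched in a few lines. Because the slot indices depend only on the token IDs, they can be computed before the forward pass, and only the rows that will actually be touched need to be copied over. Here a plain Python list stands in for the host-resident table and a dict for the small on-device cache; the sizes, hash, and function names are assumptions for illustration.

```python
def ngram_slot(ngram, num_slots):
    """Deterministic hash from a tuple of token IDs to a table row (assumed hash)."""
    h = 0
    for tok in ngram:
        h = (h * 1_000_003 + tok) % (1 << 61)
    return h % num_slots


def plan_prefetch(token_ids, n, num_slots):
    """Compute every row the forward pass will need, from token IDs alone."""
    slots = {
        ngram_slot(tuple(token_ids[i + 1 - n : i + 1]), num_slots)
        for i in range(n - 1, len(token_ids))
    }
    return sorted(slots)


def prefetch(host_table, slots):
    """Copy only the needed rows out of the large host-side table."""
    return {s: host_table[s] for s in slots}


# Stand-in for a large table living in system RAM (tiny here for the demo).
host_table = [[float(i)] * 4 for i in range(4096)]
needed = plan_prefetch([5, 17, 5, 17, 9], n=2, num_slots=len(host_table))
device_cache = prefetch(host_table, needed)
```

In this example, five tokens produce four bigrams but only three distinct rows, so three rows out of 4,096 ever leave “host memory.” That gap between table size and rows touched per step is what keeps the VRAM footprint small.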

This is where it outflanks the competition on business logic: it lowers the “holding cost” of knowledge.

Unfinished Thoughts: Pluggable “Soul Chips”?

Extrapolating from Engram’s logic, a crazy idea emerged.

Since memory (Engram) and reasoning (MoE) are decoupled in the architecture, will future models adopt a “console + cartridge” model?

Imagine you own a DeepSeek-Core with extremely strong basic reasoning capabilities (only 10B parameters, pure logic brain), and then, depending on the task, you insert different Engram Cartridges:

- Writing code today? Insert Python-Engram (50GB).

- Seeing a doctor tomorrow? Swap in Medical-Engram (100GB).

- Going to court the day after? Swap in Law-Engram (80GB).

If this “pluggable intelligence” comes true, it will completely change the business model of model distribution. Currently, a model is a monolith—take it all or leave it. In the future, perhaps what we sell will no longer be models, but highly refined and compressed “Engram Memory Packs.”

If we get even bolder, does this mean we are moving closer to “digital immortality”? If a person’s life experiences can be compressed into an Engram pack and plugged into a general-purpose AI brain, would that AI be considered to possess that person’s “soul”?

Final Words

DeepSeek’s update, on the surface, is the open-sourcing of a technical module, but in reality, it is a correction of the Transformer’s brute-force aesthetics.

It reminds us that intelligence is not equal to memory, and certainly not equal to the piling up of parameters.

In today’s world where AI compute cards are hyped as financial assets, Engram is a breath of fresh air (or perhaps a disruptive torrent). It proves that within the microscopic structure of algorithms, there are still huge gold mines waiting to be excavated.

Sometimes, to go further, we really need to look back at the scenery we’ve forgotten. N-gram is not dead; it just changed into a more cyberpunk outfit and returned to center stage.