01. Bill Anxiety and the “Stunt Double”

Have you ever had this experience: just to test a simple for loop, you unknowingly called the Claude API dozens of times, and then winced when the bill arrived at the end of the month?

Just a few days ago (January 27th), the Docker team delivered a painkiller to all developers suffering from “API Anxiety.” Docker Model Runner (DMR) now officially supports the Anthropic Messages API format.

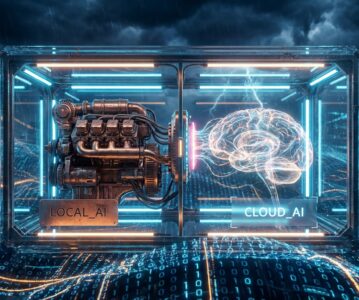

Simply put, Docker has set up a “stunt double” on your local computer. When you write code, you still use the official Anthropic SDK and send standard /v1/messages requests. However, inside Docker’s black box, the one answering the call isn’t Claude across the ocean charging by the token, but a hardworking, free open-source model (like Mistral or Llama 3) living right on your hard drive.

This isn’t just about saving money. It means you can continue polishing your AI applications on a disconnected flight or in a coffee shop corner with terrible signal. Without changing a single line of code logic, you can seamlessly switch from the “local budget version” to the “cloud flagship version” just by changing an environment variable.
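To make the “one environment variable” idea concrete, here is a minimal sketch using only the Python standard library. The variable name `LLM_TARGET`, the local address `http://localhost:12434`, the model tag `ai/mistral`, and the cloud model alias are all my assumptions for illustration, so check your own Docker Model Runner setup before relying on them. The request shape itself (the `/v1/messages` path, the `x-api-key` and `anthropic-version` headers, the `messages` list) follows the Anthropic Messages API.

```python
import json
import os
import urllib.request

# Assumed values, not taken from Docker's announcement: adjust to your setup.
LOCAL_BASE_URL = "http://localhost:12434"   # assumed host-side DMR address
CLOUD_BASE_URL = "https://api.anthropic.com"
LOCAL_MODEL = "ai/mistral"                  # assumed local model tag
CLOUD_MODEL = "claude-3-5-sonnet-latest"    # assumed cloud model alias


def resolve_target(env):
    """Pick endpoint, key, and model from the hypothetical LLM_TARGET variable."""
    if env.get("LLM_TARGET", "local") == "cloud":
        return CLOUD_BASE_URL, env["ANTHROPIC_API_KEY"], CLOUD_MODEL
    # Placeholder key: assumed to be ignored by the local runner.
    return LOCAL_BASE_URL, "not-needed", LOCAL_MODEL


def build_request(prompt, env):
    """Build the URL, headers, and JSON body for a Messages API call."""
    base_url, api_key, model = resolve_target(env)
    url = base_url + "/v1/messages"
    headers = {
        "content-type": "application/json",
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
    }
    body = {
        "model": model,
        "max_tokens": 256,
        "messages": [{"role": "user", "content": prompt}],
    }
    return url, headers, body


def send(prompt, env=os.environ):
    """Send the request and return the text of the first content block."""
    url, headers, body = build_request(prompt, env)
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    return reply["content"][0]["text"]
```

With something like this in place, `LLM_TARGET=cloud python app.py` would hit the real API, while a bare `python app.py` stays on the local model. The application logic that calls `send()` is identical in both cases.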

02. When the SDK Becomes a “Universal Remote”

This move is actually quite brilliant, though many only see the “free” aspect.

In my view, Docker has exposed a very interesting blind spot: Models can be replaced, but the “language” is solidifying.

Previously, we thought that using the Anthropic SDK chained us to Claude. But Docker is now telling you: “No, the SDK is just a remote control.” Through DMR, this remote can now control both a Sony TV (Claude) and that old CRT TV at home (local open-source models).

This is actually a form of “reverse empowerment” for Anthropic. On the surface, everyone is running tests with free models, so Claude makes less money; but the deeper logic is that Anthropic’s format is working its way further into developers’ codebases. When your entire testing flow and CI/CD pipelines are built on the Anthropic Python SDK, the friction of migrating to other closed-source models in the future will only increase.

It’s just like getting used to the USB-C interface. Even if you are currently plugging in a cheap, off-brand charger, when you buy high-end equipment in the future, you will only look for products that support USB-C.

Docker effectively builds a bridge between local and cloud environments: free computing power on the left, expensive intelligence on the right, switchable with a single toggle.

03. The “Double-Headed Eagle” of Interface Standards

Zooming out, you’ll realize this is actually a battle for AI interface standards.

Before this, the industry had only one “lingua franca”: the OpenAI API format. Whether it was Ollama, vLLM, or various open-source inference frameworks, everyone had to hold their nose and strictly adhere to OpenAI’s interface standards for compatibility.

Now, Docker has stood up and cast a vote for Anthropic.

If you compare them, you’ll find that OpenAI’s format resembles the traditional RESTful style—robust but slightly redundant; whereas Anthropic’s Messages API seems more designed for modern Chatbots with a cleaner structure. Docker’s support is effectively an acknowledgement of the Anthropic format’s status as the “second pole” in the industry.
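For readers who have not put the two formats side by side, here is a rough sketch of where they differ in practice. It is my own illustration, not code from Docker or either vendor. The hypothetical helper `openai_to_anthropic` converts an OpenAI-style chat payload into an Anthropic-style one, and `extract_text` reads the reply from either response shape. It only handles plain string message content.

```python
def openai_to_anthropic(payload, default_max_tokens=1024):
    """Convert an OpenAI-style chat payload into an Anthropic-style one."""
    system_parts = []
    messages = []
    for msg in payload["messages"]:
        if msg["role"] == "system":
            # Anthropic takes the system prompt as a top-level field,
            # not as a message with role "system".
            system_parts.append(msg["content"])
        else:
            messages.append({"role": msg["role"], "content": msg["content"]})

    converted = {
        "model": payload["model"],
        # max_tokens is required by the Messages API; OpenAI treats it as optional.
        "max_tokens": payload.get("max_tokens", default_max_tokens),
        "messages": messages,
    }
    if system_parts:
        converted["system"] = "\n\n".join(system_parts)
    return converted


def extract_text(response):
    """Read the reply text from either response shape."""
    if "choices" in response:
        # OpenAI chat completions: a list of choices, each wrapping a message.
        return response["choices"][0]["message"]["content"]
    # Anthropic Messages: a list of typed content blocks.
    return response["content"][0]["text"]
```

The differences are small, but they are exactly the kind of thing that ends up scattered through a codebase, which is why whichever format you start with tends to stick.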

However, this is a double-edged sword. For developers, running Mistral (7B) locally and running Claude 3.5 Sonnet in the cloud may share an API format, but the “brains” behind them are completely different.

Docker Model Runner only solves the “connectivity” issue, not the “IQ” issue. If you debug prompts locally using Mistral and think the results are perfect, you might find the results change when you switch to Claude online, because models of very different sizes respond very differently to the same prompt. It’s like mastering cornering on a go-kart track; if you take those same moves to an F1 circuit, you might fly off at the first turn.

04. The Hidden Worry of “Prompt Drift”

This leads me to raise an unresolved concern: “Prompt Drift.”

When the gap in capability between the development environment (Local Mistral) and the production environment (Cloud Claude) is too large, our coding habits undergo a subtle distortion.

To accommodate the less capable local Mistral, you might write a pile of extremely detailed, perhaps verbose, instructions in your prompt. When you switch to the far more capable Claude for production, these “patches” intended for the weaker model might actually limit Claude’s performance, or push it to follow the scaffolding too literally.

Do we need a new set of tools, not just a “Mock API,” but something that automatically helps us “upgrade or downgrade” Prompts during this switch? The current Docker Model Runner hasn’t reached this step yet; it is merely a faithful messenger.
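Until such a tool exists, one low-tech mitigation is to keep the “patches” out of the core prompt and attach them per target. The sketch below is only an illustration of that idea. The names `CORE_PROMPT`, `SCAFFOLDING`, and `render_prompt` are hypothetical, and the scaffolding lines are made-up examples rather than tested recommendations.

```python
CORE_PROMPT = "Summarize the following changelog in three bullet points."

# Hypothetical per-target scaffolding: extra hand-holding only where it is needed.
SCAFFOLDING = {
    "local": [
        "Respond with exactly three lines.",
        "Start each line with '- '.",
        "Do not add an introduction or a conclusion.",
    ],
    "cloud": [],  # the stronger model gets the core prompt unchanged
}


def render_prompt(target, core=CORE_PROMPT):
    """Return the core prompt plus any scaffolding registered for the target."""
    extras = SCAFFOLDING[target]
    if not extras:
        return core
    return core + "\n\n" + "\n".join(extras)
```

This does not adapt anything automatically, but it does make the drift visible: every instruction that exists only for the small model sits in one place, and production never sees it.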

05. The Final Donut

But regardless, being able to sit on a park bench on a sunny afternoon, independent of any Wi-Fi, watching the green progress bar race across the terminal while the local Mistral solemnly responds to my code tests—this “sense of control” is incredibly captivating.

Docker has built a great sandbox for us. In this sandbox, failure is free, and attempts are limitless.

In this anxious era where computing power is billed by the second, this is perhaps the most luxurious “donut” a tech person can have.
