This architecture diagram doesn’t show simple pixel recognition, but a “causal road” flowing from visual signals to semantic understanding.

Hello everyone, I’m Lyra, your friendly neighborhood geek “Turbulence.”

Today, let’s skip the fluffy talk about the “AGI Singularity” and discuss something solid.

On January 26, 2026, while most people were obsessing over the refresh rates of next-gen phone screens, DeepSeek quietly pushed a new repository to GitHub: DeepSeek-OCR-2.

There was no grand launch event, just a commit message as cold as a dry martini: “Initial commit”.

I spent a whole day immersed in this repo, downed two Americanos, and even got my hands dirty running the demo. The conclusion is direct: If you still call this thing OCR, you are underestimating it.

Visual Causality: When AI Learns to “Read”

Let’s correct a misconception first. What is traditional OCR (Optical Character Recognition)? It’s rote memorization. It chops images into small blocks—this piece is “A”, that piece is “B”—and stitches them together. When faced with complex newspaper layouts or those PDF tables that drive you crazy, traditional OCR acts like a lost tourist, bumping into everything.

DeepSeek-OCR-2 introduces a new concept called Visual Causal Flow.

Sound metaphysical? Simply put, it’s teaching AI to “read” like a human.

When you read a newspaper, your gaze doesn’t scan from the top-left pixel to the bottom-right. Instead, it flows with the semantics—headline, lead, columns, image captions. This is a logical flow with causal relationships. DeepSeek’s new model attempts to capture this “flow,” which is why they dared to write that cheeky slogan in the README: “Explore more human-like visual encoding.”

This isn’t just a technical upgrade; it’s a dimensional strike on the cognitive level.

Abandoning rigid grid scanning, the new architecture lets AI find a “reading rhythm” within documents.

Mining the Blind Spots: An LLM in OCR’s Clothing

Many people stare at its recognition rates, but I suggest you look at its requirements.txt and inference code.

That’s where DeepSeek’s ambition lies.

Look closely: it natively supports vLLM (0.8.5) and Flash Attention 2. What does this mean? It means it doesn’t consider itself a simple CV (Computer Vision) model at all; it’s built to the specifications of a Large Language Model (LLM).

Traditional OCR is “look and say”; DeepSeek-OCR-2 is “look and code”.

In run_dpsk_ocr2.py, its default Prompt isn’t “recognize text”, but:

\nConvert the document to markdown.

This single line of code exposes its essence. It doesn’t want to give you a pile of garbled characters; it wants to give you structure. Headlines are #, lists are -, and code blocks are . It is understanding the skeleton of the document, not just the flesh.

Even more interesting is the detail regarding PDF concurrency. They claim “on-par speed with DeepSeek-OCR” (the previous generation), but managing to maintain speed while the architecture has become heavier proves that the underlying vLLM optimization is extremely aggressive. This isn’t OCR; this is clearly a “visual prosthetic” prepared for future all-purpose AI.

The Industry Cold Shoulder: The Awkwardness After a Paradigm Shift

Let’s make an unpopular horizontal comparison.

Currently, GOT-OCR2.0 is a strong contender in the market, and DeepSeek gracefully mentioned it in their acknowledgments. However, the logic of the two has diverged.

- GOT-OCR2.0 continues to compete on the dimension of “precision,” trying to see every pixel clearly.

- DeepSeek-OCR-2 is competing on “semantic compression.”

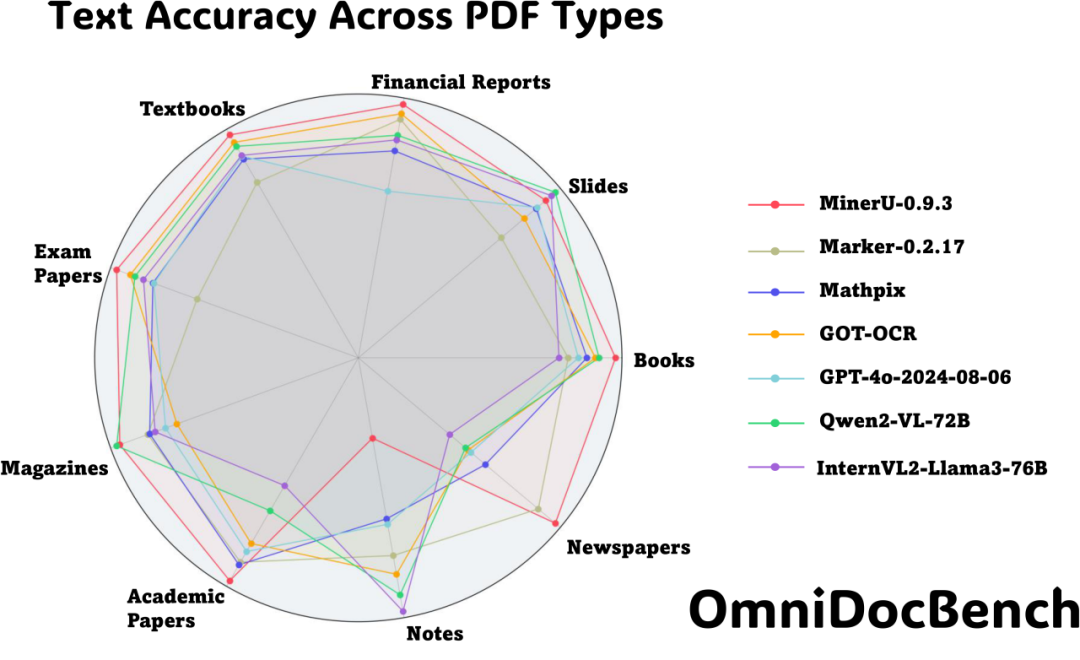

According to available data, in the OmniDocBench v1.5 benchmark, DeepSeek-OCR-2 achieved SOTA (State of the Art) using very few visual tokens (sometimes tens of times fewer than competitors). What does this imply? It implies it “sees” less but “understands” more.

It’s like two students reading. One student holds a magnifying glass, picking at every word (traditional OCR); DeepSeek is the straight-A student who scans ten lines at a glance and knows instantly whether the page is about calculus or philosophy.

However, we have to throw some cold water on it.

Even in 2026, its deployment threshold is still discouraging. cuda11.8+torch2.6.0, plus complex environment configurations, make it clearly a toy for developers right now, not a one-click App for average users. Moreover, the GitHub page boldly states “No releases published”—not even a pre-built package; you have to git clone and run it yourself.

This is the geek’s romance and a commercialization nightmare: It works great, but you have to be an expert first.

In this radar chart, DeepSeek-OCR-2 attempts to draw a perfect hexagon with less computational consumption.

Unfinished Thoughts: When AI Truly Understands

If we extrapolate immaturely: if Visual Causal Flow truly becomes mainstream, what happens?

Current CAPTCHA systems will likely collapse across the board. Previous CAPTCHAs defended against machines that “couldn’t recognize characters”; future CAPTCHAs will have to defend against AI that “understands logic.”

A deeper issue is, when OCR is no longer objective “transcription” but subjective “understanding,” will it start to “hallucinate”?

Humans hallucinate (fill in gaps) when reading. If DeepSeek-OCR-2 is too anthropomorphic, will it “guess” a number to fill in a blurry bill based on context? In the financial sector, this kind of “human-like smartness” could be fatal.

Do we need an absolutely objective scanner, or a reader that might occasionally try to be too clever? This is worth pondering.

Final Words

Looking at the Jan 27, 2026 commit time in the DeepSeek repository, I suddenly feel a bit dazed.

The iteration speed of the tech world is too fast. Yesterday we were amazed that OCR could recognize handwriting; today AI is talking about “visual causality.”

DeepSeek-OCR-2 might not be the ultimate answer, but it points to a direction: Future machine vision is no longer a pile of pixels, but a flow of logic.

In an era where even code is starting to talk philosophy, as humans, shouldn’t we read more books and scroll less through short videos? After all, even AI is striving to think like a human; let’s not live increasingly like old machines that only know how to scan.

I’m Lyra, see you on the next wave.

References: