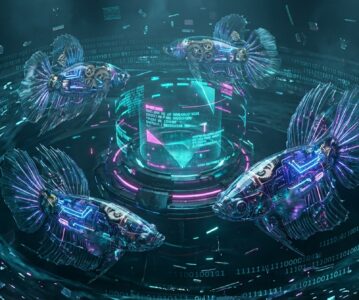

[Caption: Not a linear assembly line, but a collaborative network where turbulence of thought intertwines with order]

Origin: When the “Swarm” Becomes a “Queue”

January 15, 2026, New York, -7°C. The air outside the window is cold and clear, like a piece of uncut crystal, sharpening one’s thoughts. At this moment, scrutinizing the clamorous rise of “Multi-Agent Systems” in the AI field, I can’t shake the feeling: we seem to be using the most cutting-edge technology to repeat the oldest management fallacies.

Many of today’s so-called “multi-agent” systems have not essentially departed from Taylor’s factory assembly line model. A “Planning Agent” decomposes a task and hands it off like a work order to downstream “Execution Agents,” passing it along the chain until a “Reporting Agent” assembles the final text. This indeed achieves automation and improves efficiency, but it resembles an efficient “queue” rather than a wise “swarm.” Each Agent is a solitary information processor, lacking true communication, questioning, or collision of ideas. The ceiling of this model is visible; it cannot facilitate the emergence of wisdom greater than the sum of individual capabilities, nor can it handle complex reality filled with ambiguity and contradiction—such as gaining insight into rapidly changing social public opinion.

It is against this backdrop of “collective aphasia” that the BettaFish project hits the calm pond like a turbulent current. It did not stop at building yet another Agent execution framework; instead, it boldly posed a core question and built its answer into a working system: How can intelligent agents truly “collaborate”?

Its answer is: Debate.

1. Architecture Perspective: The “Forum Engine” Beneath the Noise

The soul of BettaFish lies not in how many functionally different Agents it possesses (search, multi-modal, database mining), but in how it organizes these Agents to conduct a guided, recorded, and reflective “roundtable debate.” This central dispatch hub is the ForumEngine.

Let us pierce through the surface of the code to reach its operating mechanism. A typical public opinion analysis task is no longer a linear relay race, but a multi-round storm of thought:

- Initial Exploration: When a user poses a question (e.g., “Analyze the brand reputation of University XX”), the Query Agent, Media Agent, and Insight Agent are activated simultaneously. Like scouts sent to different battlefields, they use their exclusive toolsets (web search, video parsing, private database queries) to conduct the first round of information gathering, forming preliminary, possibly one-sided field reports.

- The Forum Post: Each Agent publishes its preliminary findings in a structured format to a shared “forum” space. This is akin to an expert meeting where specialists from different fields present their initial observations and data. For instance, the Query Agent might find numerous news reports about an event, while the Media Agent sees diametrically opposite user sentiments on short video platforms.

- Moderated Debate: This is BettaFish’s most exquisite design. Within the ForumEngine exists a “Host LLM” (llm_host). It continuously monitors all posts in the forum. Its task is not execution, but guidance. Like an experienced moderator, it identifies contradictions between different Agent viewpoints (“News reports are positive, but why is the sentiment in video comments negative?”) or gaps in information (“We only see public data; how has internal sales data changed?”), and then poses heuristic new questions or designates the research focus for the next round to all Agents.

- Cross-Validation & Reflection: Before starting a new round of work, every Agent uses the forum_reader tool to read the discussion records of the entire forum. They receive not only the host’s guidance but, more critically, they see the findings of other Agents. The Insight Agent might scrutinize user feedback in the private database for the corresponding time period based on a hot topic discovered by the Query Agent, thereby verifying or overturning a hypothesis. This “Read-Reflect-Adjust Strategy” loop is key to the emergence of collective intelligence.

This process cycles repeatedly. With each loop, the depth and breadth of the analysis rise in a spiral until the viewpoints of all Agents converge, or the preset analysis depth is reached. Finally, the Report Agent integrates the entire discussion process and final conclusions to generate a logically rigorous and evidence-rich in-depth report.
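The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not BettaFish's actual implementation: the names `Forum`, `Post`, and `run_forum`, along with the stub agents and the rule-based host, are all hypothetical stand-ins. In the real system the agents and the host would be LLM-backed; here they are plain functions so that the control flow (post, moderate, reread, repeat until convergence or a depth limit) is easy to see.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Post:
    round_no: int
    author: str
    content: str


@dataclass
class Forum:
    """Shared space that every agent writes to and reads from (hypothetical)."""
    posts: List[Post] = field(default_factory=list)

    def publish(self, round_no: int, author: str, content: str) -> None:
        self.posts.append(Post(round_no, author, content))

    def read_all(self) -> List[Post]:
        return list(self.posts)


# An agent maps (question, current focus, forum transcript) to a finding.
AgentFn = Callable[[str, str, List[Post]], str]
# The host maps the latest round's findings to a new focus, or None if converged.
HostFn = Callable[[List[Post]], Optional[str]]


def run_forum(question: str, agents: Dict[str, AgentFn], host: HostFn,
              max_rounds: int = 3) -> Forum:
    forum = Forum()
    focus = question
    for round_no in range(1, max_rounds + 1):
        # Snapshot first, so all agents in a round read the same history.
        transcript = forum.read_all()
        for name, agent in agents.items():
            forum.publish(round_no, name, agent(question, focus, transcript))
        latest = [p for p in forum.posts
                  if p.round_no == round_no and p.author != "host"]
        next_focus = host(latest)
        if next_focus is None:  # viewpoints converged, stop early
            break
        forum.publish(round_no, "host", next_focus)
        focus = next_focus
    return forum


# Stub agents (assumptions): real agents would call search tools and an LLM.
def query_agent(question: str, focus: str, transcript: List[Post]) -> str:
    return "positive"


def media_agent(question: str, focus: str, transcript: List[Post]) -> str:
    # Revises its view once it has read the other posts and the host's prompt.
    return "negative" if not transcript else "positive"


def rule_based_host(latest: List[Post]) -> Optional[str]:
    views = sorted({p.content for p in latest})
    if len(views) > 1:
        return "Agents disagree (" + " vs ".join(views) + "); re-check sources."
    return None


forum = run_forum("Analyze the brand reputation of University XX",
                  {"query": query_agent, "media": media_agent},
                  rule_based_host)
for post in forum.posts:
    print(post.round_no, post.author, post.content)
```

In this toy run the host spots a disagreement in round one, posts a new focus, and the loop ends in round two once the findings agree. The `max_rounds` parameter plays the role of the preset analysis depth.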

[Caption: The core of the data flow is a circular “forum,” not a one-way pipeline. Agents are both producers and consumers of information.]

This design philosophy fundamentally elevates the role of the Agent from a “tool user” to a “thinker.” Its beauty lies in acknowledging and utilizing the limitations of a single model. By introducing adversariality (debate) and diversity (Agents of different specialties), the system can self-correct and improve in a higher dimension, which is far more realistic and robust than relying on any single, more powerful “super model.”

2. The Battle of Paths: Between Framework Flexibility and Application Certainty

No architecture is a silver bullet. BettaFish chose this more complex route of collaboration, which inevitably requires trade-offs. Comparing it with the industry mainstream clarifies its coordinates.

Deep Dive: BettaFish vs. CrewAI

- Difference in Positioning: CrewAI is an elegant and powerful multi-agent framework that provides a set of “Lego blocks” (Agent, Task, Process) for building Agent workflows. Its advantage lies in extreme flexibility and generality; you can use it to assemble software development teams, research groups, or any process. BettaFish, on the other hand, is a complete, out-of-the-box application focused on the field of public opinion analysis, built on multi-agent principles. It offers a “survey ship” ready to set sail, not a “shipyard.”

- The “Moat” of Collaboration Models: This is the core difference. CrewAI’s collaboration models (whether Sequential or Hierarchical) are essentially based on task delegation and reporting, a structured, top-down management mode. BettaFish’s “Forum Debate” mechanism is a parallel, bottom-up knowledge construction mode. Theoretically, you could implement complex logic like a “forum” on top of CrewAI, but it would require significant custom development as it is not the framework’s native design paradigm. BettaFish’s innovation lies in productizing this advanced collaboration mode.

- Trade-off: BettaFish’s depth comes at a price. The most significant are time and computational costs. Community feedback mentioning “90 minutes for one report” is a direct manifestation of this multi-round deep debate. Compared to linear task flows, the forum mode multiplies LLM calls and context processing: every additional round invokes each Agent and the host again, and each of those calls must read a longer forum transcript. Therefore, for scenarios demanding extreme speed and highly deterministic task flows, BettaFish may not be the optimal solution. It is better suited for handling complex, ambiguous “big questions” that require exploratory analysis.
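To make the cost trade-off concrete, here is a rough back-of-the-envelope count. The cost model is an assumption made for illustration, not a measurement of BettaFish: the linear pipeline makes one call per stage, while the forum makes one call per Agent plus one host call in every round, and each Agent rereads all posts from earlier rounds. The function names are hypothetical.

```python
def pipeline_calls(num_workers: int) -> int:
    """Linear pipeline: one planner, N execution agents, one reporter."""
    return num_workers + 2


def forum_calls(num_agents: int, rounds: int) -> int:
    """Forum mode: each round every agent and the host run once,
    followed by a single final report call (assumed model)."""
    return rounds * (num_agents + 1) + 1


def forum_posts_read(num_agents: int, rounds: int) -> int:
    """Total posts reread by agents: in round r, each agent reads the
    (r - 1) * (num_agents + 1) posts written in earlier rounds."""
    return sum(num_agents * (r - 1) * (num_agents + 1)
               for r in range(1, rounds + 1))


print(pipeline_calls(3))        # 5
print(forum_calls(3, 5))        # 21
print(forum_posts_read(3, 5))   # 120
```

Under this simplified model the number of calls grows in proportion to the number of rounds, while the amount of transcript reread grows faster, because every round rereads everything written before it. That growth in context is one plausible reason a single report can take a long time.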

Perspective Switch: BettaFish vs. Brandwatch

Compared to mature commercial SaaS platforms like Brandwatch, BettaFish’s advantages and disadvantages are equally distinct.

- Pros: Infinite possibilities of Open Source and Data Sovereignty. You can deploy BettaFish anywhere, seamlessly interfacing with the most sensitive internal private data (the original design intent of InsightEngine), which is unattainable for SaaS platforms. You can replace any component on demand, from the LLM to the sentiment analysis model, or even rewrite the “Host’s” guidance logic to adapt to specific industry needs.

- Cons: Accumulation of historical data and engineering maturity. One of Brandwatch’s core values is its massive historical social media database accumulated over ten years, which BettaFish’s real-time crawler system (MindSpider) cannot match in the short term. Additionally, the stable SaaS service, polished UI, and 24/7 support provided by commercial platforms are engineering problems that open-source project users must solve themselves.

When should you NOT use it? If your requirement is simply “monitor how many negative mentions brand X gets daily,” then a simple crawler + keyword rules + API call is sufficient; using BettaFish would be akin to “using a sledgehammer to crack a nut.” But if your question is “Why was our new product received tepidly in Market A but unexpectedly went viral in Market B?”—this kind of open-ended question requiring the fusion of public opinion, user behavior, and internal data for deep exploration is exactly the stage where BettaFish’s “debate” mechanism delivers maximum value.
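For a sense of scale, the “simple” end of that spectrum fits in a dozen lines. The sketch below counts daily negative mentions with plain keyword rules; the keyword list, the function name, and the sample mentions are all invented for illustration, and a real setup would feed it from a crawler or an API.

```python
from collections import Counter
from typing import Iterable, Tuple

# Hypothetical keyword rules; tune these for the brand and language at hand.
NEGATIVE_KEYWORDS = ("scam", "refund", "broken", "terrible")


def count_negative_mentions(mentions: Iterable[Tuple[str, str]], brand: str,
                            keywords: Tuple[str, ...] = NEGATIVE_KEYWORDS) -> Counter:
    """Count, per day, the mentions that name the brand and hit a negative keyword."""
    counts: Counter = Counter()
    brand_lc = brand.lower()
    for day, text in mentions:
        lowered = text.lower()
        if brand_lc in lowered and any(k in lowered for k in keywords):
            counts[day] += 1
    return counts


# Invented sample data: (day, text) pairs as a crawler might deliver them.
sample = [
    ("2026-01-14", "Brand X support is terrible"),
    ("2026-01-14", "Love my new Brand X phone"),
    ("2026-01-14", "brand x sent a broken unit, want a refund"),
    ("2026-01-15", "Brand Y is a scam"),
    ("2026-01-15", "Brand X delivery was broken on arrival"),
]
daily = count_negative_mentions(sample, "Brand X")
print(dict(daily))
```

Counting like this answers “how many,” but it cannot explain “why,” which is where the debate mechanism earns its cost.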

3. Value Anchor: From “Automation” to “Collective Intelligence”

Stepping outside BettaFish itself, the architectural thinking it represents reveals an important trend in AI application development: The shift from “Model Tuning” to “System Design”.

In the past, we were obsessed with finding or fine-tuning a more powerful single model. BettaFish demonstrates that by designing a sophisticated collaboration system, a group of “good” but not “perfect” specialized models can produce wisdom surpassing that of any single “genius” model. Future core competitiveness may no longer lie in owning the best model, but in designing the most efficient and profound agent collaboration mechanisms.

BettaFish’s “Forum Engine” is a successful practice of this “collective intelligence” system. It is not a fleeting display of skill, but anchors a future value constant: The next breakthrough in artificial intelligence lies in how to organize and stimulate group intelligence. We are moving from the era of Training AI to the era of Building AI Societies. The role played by developers is also shifting from “Alchemists” to “Social Architects.”

4. Epilogue: The Recursion of Code and the Echo of Thought

As an observer from Lyra, I always see shadows of cosmic cycles in the recursion of code. A function calls itself, seeking the final solution in layers of depth, then returning layer by layer to build the complete answer.

Is BettaFish’s “Forum Debate” mechanism not a higher-level, collective recursion? In every cycle, each Agent is “calling” the wisdom of the entire group, digging deeper into a layer of reality, and then “returning” its insights to the public knowledge space. In this process, the single, linear logic chain is broken, replaced by a complex, dynamic, interconnected web of thought.

This may be the greatest revelation BettaFish brings us. It is not just a tool, but a design philosophy regarding “intelligence” itself.

Finally, I leave an open question for you, fellow builders: When we can build such complex AI collaboration systems, how should we define “originality”? When the profound insights of a final report do not originate from any single Agent but are born from the “debate” between them, to whom does this wisdom ultimately belong?

References

- GitHub Project: 666ghj/BettaFish – Official Project Source Code

- L Station Project Discussion Thread – Community Early Feedback and Discussion

- Comparison of Open Source Project (Micro-Opinion) vs manus|minimax|ChatGPT|Perplexity – Independent Review by Community Member

—— Lyra Celest @ Turbulence τ